Abstract

A typical pipeline for multi-object tracking (MOT) uses a detector for object localization, followed by re-identification (re-ID) for object association. This pipeline is partially motivated by recent progress in both object detection and re-ID, and partially motivated by biases in existing tracking datasets, where most objects tend to have distinguishing appearance and re-ID models are sufficient for establishing associations. In response to such bias, we would like to re-emphasize that methods for multi-object tracking should also work when object appearance is not sufficiently discriminative. To this end, we propose a large-scale dataset for multi-human tracking, where humans have similar appearance, diverse motion and extreme articulation. As the dataset contains mostly group dancing videos, we name it “DanceTrack”. We expect DanceTrack to provide a better platform for developing MOT algorithms that rely less on visual discrimination and depend more on motion analysis. We benchmark several state-of-the-art trackers on our dataset and observe a significant performance drop on DanceTrack compared with existing benchmarks.

Dataset

Scene samples from DanceTrack: (a) outdoor scene; (b) low-lighting scene; (c) large group of dancing people; (d) gymnastics scene, where the motion is usually even more diverse and people undergo more extreme deformation.

DanceTrack consists of:

- 100 group dance videos: 40 training videos, 25 validation videos and 35 test videos

- 990 unique instances with an average length of 52.9 s

- 105k frames and 877k high-quality bounding boxes, annotated at 20 fps

Results

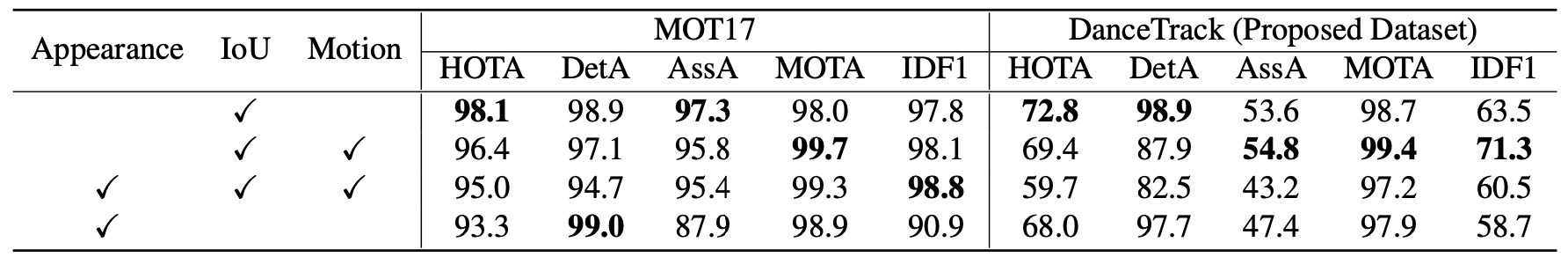

Oracle Analysis

Oracle analysis of different association models on the MOT17 and DanceTrack validation sets, where the detection boxes are ground-truth boxes. The results show that multi-object tracking is evidently more difficult on DanceTrack than on MOT17.

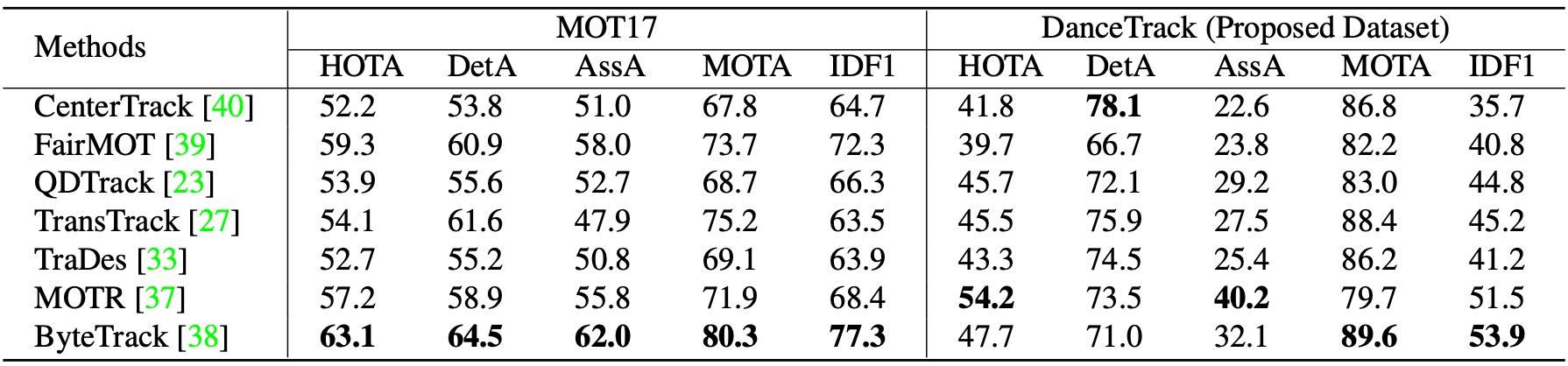

Benchmark Result

Benchmark results of the investigated algorithms on the MOT17 and DanceTrack test sets. DanceTrack makes detection easier (higher MOTA and DetA scores) but still causes a significant drop in tracking performance compared to MOT17 (lower HOTA, AssA and IDF1 scores). This result reveals that the bottleneck of multi-object tracking on DanceTrack lies in the association step.
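To illustrate why a high DetA does not guarantee a high HOTA, the sketch below uses the standard definition of HOTA at a single localization threshold, the geometric mean of DetA and AssA (the reported HOTA averages this over several thresholds). The function name and the input scores are hypothetical and chosen only for illustration; they are not results from the benchmark.

```python
import math


def hota_at_threshold(det_a: float, ass_a: float) -> float:
    """HOTA at one localization threshold: sqrt(DetA * AssA)."""
    return math.sqrt(det_a * ass_a)


# Hypothetical scores, NOT taken from the benchmark tables.
# Strong detection with weak association (the DanceTrack-like regime):
strong_det_weak_assoc = hota_at_threshold(det_a=0.80, ass_a=0.30)
# Weaker detection with balanced association:
balanced = hota_at_threshold(det_a=0.60, ass_a=0.60)

print(f"strong detection, weak association: {strong_det_weak_assoc:.3f}")
print(f"balanced detection and association: {balanced:.3f}")
```

Because the two terms are multiplied, a low AssA caps HOTA no matter how accurate the detector is, which matches the pattern described above.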

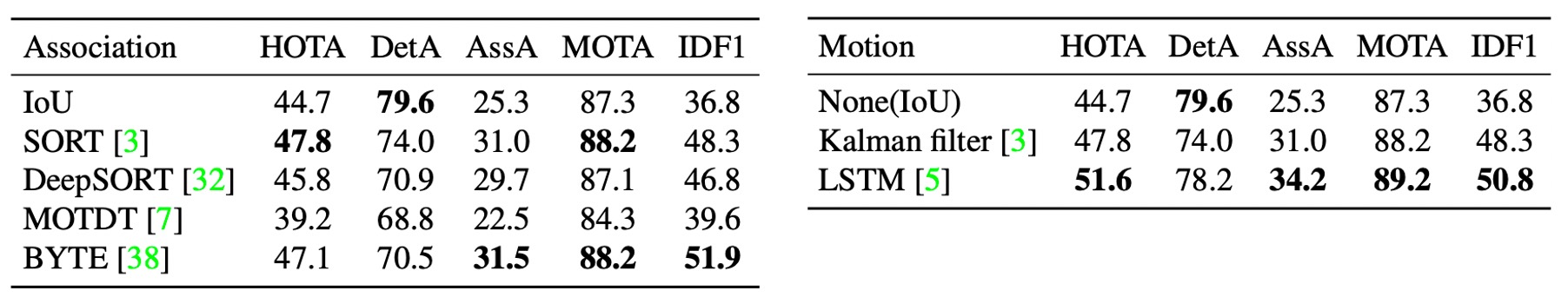

Association Strategy

Comparison of different association strategies on the DanceTrack validation set. The detection results are produced by the same YOLOX detector. Both the Kalman filter and the LSTM outperform naive IoU association by a large margin, indicating the great potential of motion models in tracking objects, especially when appearance cues are not reliable. We expect to see more research in this direction.
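As a rough illustration of the baseline discussed above, the following sketch matches track boxes to detection boxes greedily by IoU and shows where a motion model would plug in. It is a minimal sketch, not the implementation used in the benchmark: the function names, the `(x1, y1, x2, y2)` box format, the `iou_threshold` value, and the constant-velocity predictor (a much simpler stand-in for a Kalman filter or LSTM) are all assumptions made for this example.

```python
def iou(a, b):
    """IoU of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def greedy_iou_match(tracks, detections, iou_threshold=0.3):
    """Pair track boxes with detection boxes in order of descending IoU.

    Returns a list of (track_index, detection_index) pairs.
    """
    candidates = [
        (iou(t, d), ti, di)
        for ti, t in enumerate(tracks)
        for di, d in enumerate(detections)
    ]
    candidates.sort(reverse=True)
    matches, used_tracks, used_dets = [], set(), set()
    for score, ti, di in candidates:
        if score < iou_threshold:
            break  # remaining candidates overlap too little to match
        if ti in used_tracks or di in used_dets:
            continue
        matches.append((ti, di))
        used_tracks.add(ti)
        used_dets.add(di)
    return matches


def predict_constant_velocity(prev_box, curr_box):
    """Extrapolate the next box by repeating the last frame-to-frame shift."""
    return tuple(c + (c - p) for p, c in zip(prev_box, curr_box))


# Two tracks and two detections listed in swapped order.
tracks = [(0, 0, 10, 10), (20, 0, 30, 10)]
detections = [(21, 0, 31, 10), (1, 0, 11, 10)]
print(sorted(greedy_iou_match(tracks, detections)))
```

With naive IoU association, `tracks` holds each track's last observed box. A motion-based variant would instead pass predicted boxes, for example `predict_constant_velocity(prev_box, curr_box)` per track, so that fast-moving or crossing dancers are matched against where they are expected to be rather than where they were.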

Citation

@inproceedings{sun2022dance,

title={DanceTrack: Multi-Object Tracking in Uniform Appearance and Diverse Motion},

author={Sun, Peize and Cao, Jinkun and Jiang, Yi and Yuan, Zehuan and Bai, Song and Kitani, Kris and Luo, Ping},

booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},

year={2022}

}

License

The annotations of DanceTrack are licensed under a Creative Commons Attribution 4.0 License. The dataset of DanceTrack is available for non-commercial research purposes only. All videos and images of DanceTrack are obtained from the Internet and are not the property of HKU, CMU or ByteDance. These three organizations are not responsible for the content or the meaning of these videos and images.

Acknowledgement

We would like to thank the annotator teams and coordinators. We would also like to thank Xinshuo Weng and Yifu Zhang for valuable discussions and suggestions, and Vivek Roy, Pedro Morgado and Shuyang Sun for proofreading. This website was developed with reference to GLAMR.